There are those that look at things the way they are, and ask why? I dream of things that never were, and ask why not?

George Bernard Shaw

Restoring faith in innovation

There is a tension between those who cling to the status quo and innovators who constantly chase new possibilities. History has shown us that the latter will dominate the marketplace, but for how long? In recent years, leading innovative companies have made headlines for disseminating scientific misinformation, eliminating teams devoted to harm prevention, releasing politically tone-deaf messages, and committing many other acts of recklessness. Coupled with the introduction of artificial intelligence and other emerging technologies, serious missteps in ethics, governance, and culture have caused the schism between the agents of change and its opponents to widen substantially. These ethical blunders, plus outright paranoia, have led to cries for stricter regulation and for companies to pull back from exploration. We believe that the answer isn’t to stop innovating or to give in to the naysayers of progress for fear of pitchforks. The best way to right our course and stay ahead of the unintended consequences of new technology is to let Purpose guide the way.

But why listen to us?

We have over 65 years’ combined experience in researching best innovation practices and advising companies, organizations, and governments on how to adopt and embed them in an ethical manner. We have developed new approaches for designing engaging experiences and have been at the helm of building inclusive, sustainable, and safe AI systems. Our research has leveraged divergent thinking by studying and integrating neuroscience, game theory, behavioral economics, ethics, and paleoanthropology, as well as firsthand perspectives from working in tech. We are always searching for superior solutions and asking the hard questions. So, when the highly regarded “move fast and break things” philosophy (popularized by Meta) backfired, we had to understand why. In the heart of every innovator is a desire to make the world a better place, and our deepest concern is that we might make it worse. While we have always known that inventions need more than just good intentions, the urgency to realize them has never been greater. When it comes to new technologies, our innovation expertise shows us that Purpose, backed by rigor and accountability, is the way to get closer to our goals while discovering these technologies’ myriad exciting applications.

Reality one

Innovation is accelerating faster than we can correct potential mistakes

Over the past decade, the speed and scale of innovation have increased exponentially, and the pandemic further accelerated its course. Moore’s Law, the observation that the number of transistors on a chip doubles roughly every two years, suggests that technology will continue to become faster, cheaper, and better. Consumers have kept pace with this whirlwind of advancements, as tools are becoming widely adopted with staggering swiftness. It took 1,000 years for paper to reach Europe from China, 80 years for automobiles to be used by the masses, and 20 years for computers to become commonplace. Instagram, however, achieved 1 million active users in 2.5 months. ChatGPT hit the 1 million user mark in only five days. The almost instant virality of today’s innovations underscores how pressing it is to mitigate damaging effects from the outset, perhaps right at the conception and ideation stages.

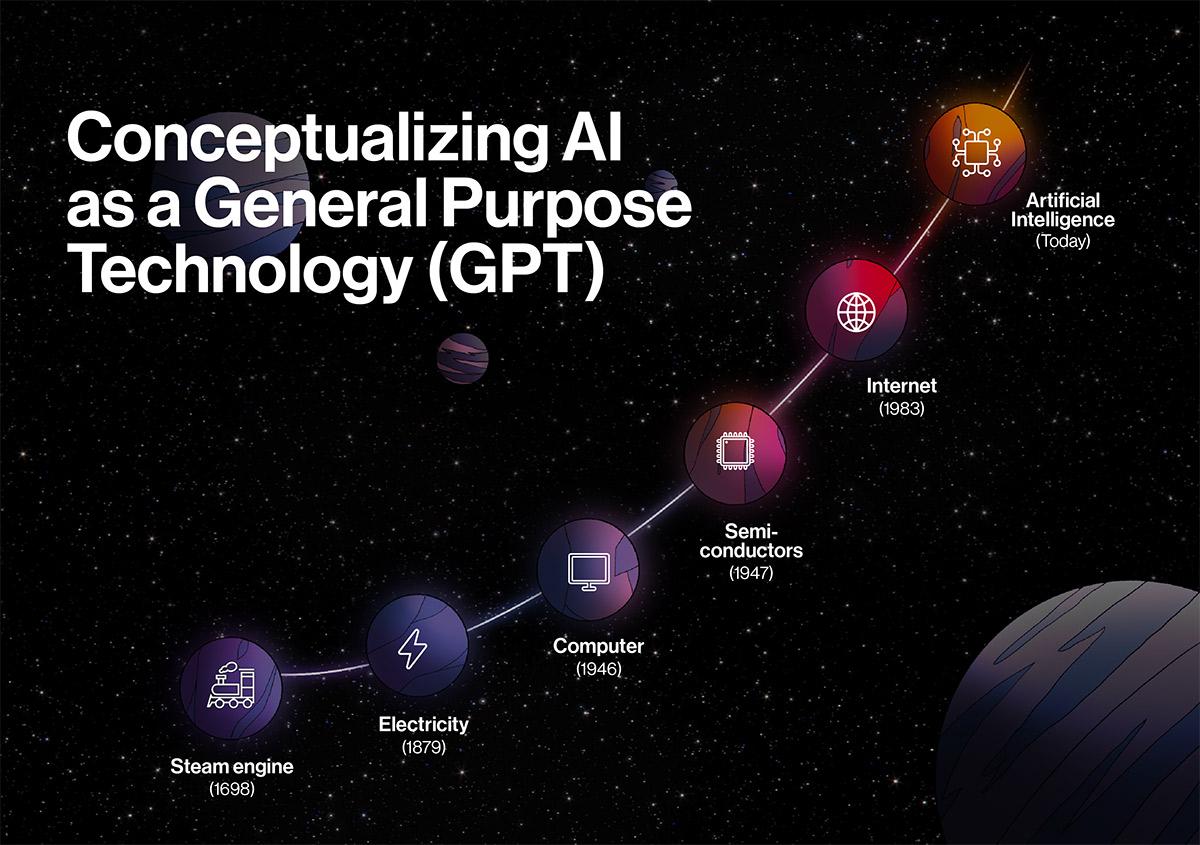

This caution is particularly necessary with GPTs. No, not the GPT in ChatGPT (Chat Generative Pre-trained Transformer); we’re talking about General-Purpose Technologies. A GPT is characterized by performing many functions, having substantial scope to expand those capabilities, and having the power to create an impact so great that it can alter the way economies and societies operate. Electricity, computing, and the internet have been previous GPTs, and now artificial intelligence, Web3, and the metaverse are simultaneously building the new frontier of how we live, work, and connect.

Although there’s massive opportunity in these spaces, there have already been cases of these innovations going awry. For example, despite ChatGPT receiving acclaim for being one of 2022’s most impressive technological innovations, it has already been mired in ethical conundrums. Most recently, OpenAI, the creator of ChatGPT, tried identifying and filtering troubling content to combat the output of toxic speech and biases that have plagued other generative A.I. This effort required humans to feed offensive textual descriptions into the generator. Beyond the content of these messages being traumatizing to begin with, the Kenyan workers contracted to complete this task were paid less than $2 an hour. The intention to stop the circulation of this content may have been pure, but the solution that involved exploiting workers was not. Unfortunately, this tactic has a long history. It is documented in Ghost Work, the book by Mary L. Gray and Siddharth Suri, which foregrounds how cheap human labor often underpins seemingly magical advances in technology, such as content moderation on social media. The complexity of innovation that is happening today is bound to create equally complex ethical dilemmas. It’s imperative we approach them with the correct mindset as we search for robust solutions.

Reality two

This amplifies the magnitude of unintended consequences

With every introduction of a general-purpose technology, we amplify the magnitude of implications. It only takes a few seconds for a computer to make a million mistakes. This is where status quo lovers rightfully start to worry. Business leaders would be wise to listen to what they have to say and adjust their approach. Many leaders are apt to trust technical experts and try to fix problems after they arise with PR and marketing initiatives. In reality, leaders are ultimately responsible for the harm caused by their company’s innovation. With the speed of adoption and near whiplash of consequences for A.I. and other emerging technologies, CEOs cannot afford to push the responsibility onto others.

Just look at the case of social media. Many leaders and entire governments wrote off social media as superficial, until it had ramifications much greater than merely keeping kids glued to the screen for too long. With social media, we’ve witnessed the good and bad sides of algorithmic mass engagement. Interesting content attracts people to the platform, and their “likes” and “shares” feed more data to the system, which then generates a more tailored experience, creating a self-reinforcing cycle of engagement. Sadly, not all highly promoted content is healthy. If we turn to what happened in Myanmar in 2017, Facebook’s algorithm facilitated the circulation of inflammatory, hateful content that led to an outbreak of religious violence. Instead of banning this dialogue upfront or curbing the troubling conversation as it developed, Facebook responded to the conflict it helped incite by shutting down for three days. This solution worked, showing the connection between online incitement and real-world violence.

These kinds of issues, along with personal data privacy and free speech concerns, present ongoing struggles with social media that will only become trickier with the debut of new GPTs. GPTs are quicker to cause negative repercussions and have second- and third-order effects that can be detrimental in the long term if not designed, implemented, and monitored in an ethical way. Tricia Wang, tech ethnographer and designer of equitable systems, also points to the implications of disrupting at scale: “when you are in charge of connectivity for a whole country or the world—breaking things is not okay anymore, because a lot of people get hurt.” Falling into the “move fast and break things” mantra modeled by Silicon Valley for the past 60 years is especially tempting for organizations feeling pressure to catch up to industry leaders; however, they can still move fast without making the mistake of considering ethics after the fact. It’s essential we commit to fixing things proactively if we are to be responsible stewards of tomorrow’s technologies.

Reality three

As a result, we can no longer afford to ‘move fast and break things’

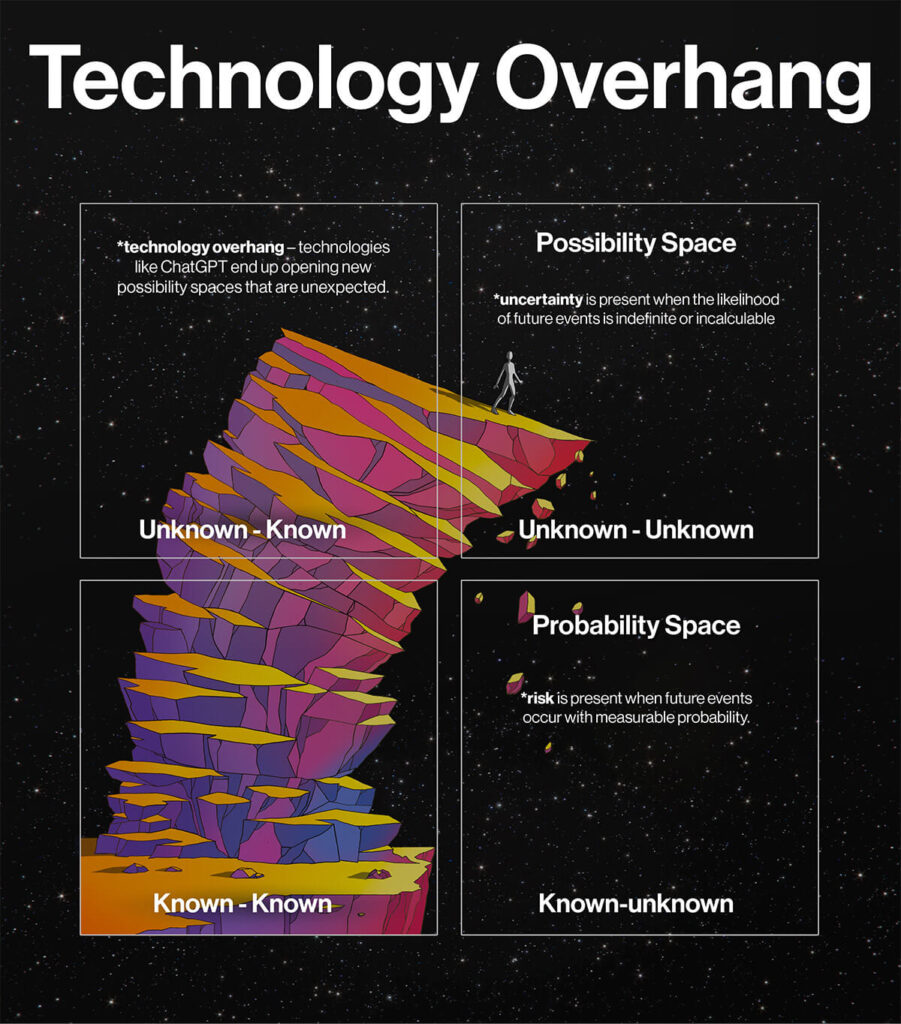

“Move fast and break things” is broken. But it’s not the only mindset that needs a fix; there are cracks in how we approach innovation’s unknowns as well. Currently, we use a risk lens to predict and prepare for the unexpected. This perspective tells us to quantify the risks and put adequate safeguards in place to control for them. Although the risk lens is still a necessary and valuable approach, it’s time to widen the aperture. We can’t know, never mind quantify, every threat that will arise as we explore new technological frontiers. With massive capability overhang in systems like ChatGPT, the threat landscape becomes particularly nebulous.

It’s not just a matter of dealing with quantifiable risk, but also of anticipating and navigating major uncertainty. Because uncertainty, unlike risk, cannot be assigned a probability, we need new tools and methodologies to explore these consequences. As we seek out the right approach, we can learn from other cases where technologies have created unforeseen damage.

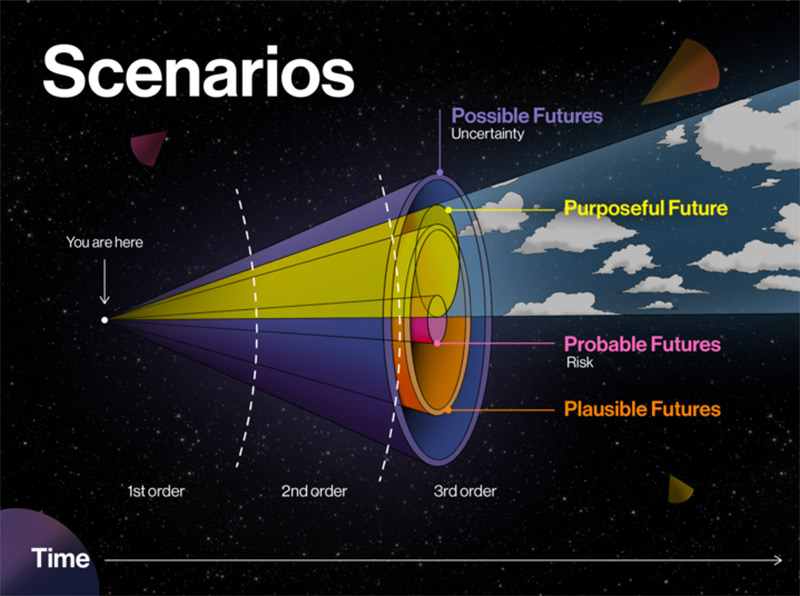

In retrospect, we actually could have seen many of these scenarios coming. Not from reading dystopian sci-fi, but from listening to the tech ethnographers, academics, and futurists who were researching the effects. It’s important to stay observant of macro signals developing as A.I.’s implications begin to play out on a wider level. Observant does not mean fearful. In fact, imagining and preparing for possible futures (e.g., through approaches like forecasting) will not only help companies navigate the uncertainty of innovation, but it will also make them feel more prepared when one of those possible futures becomes the present.

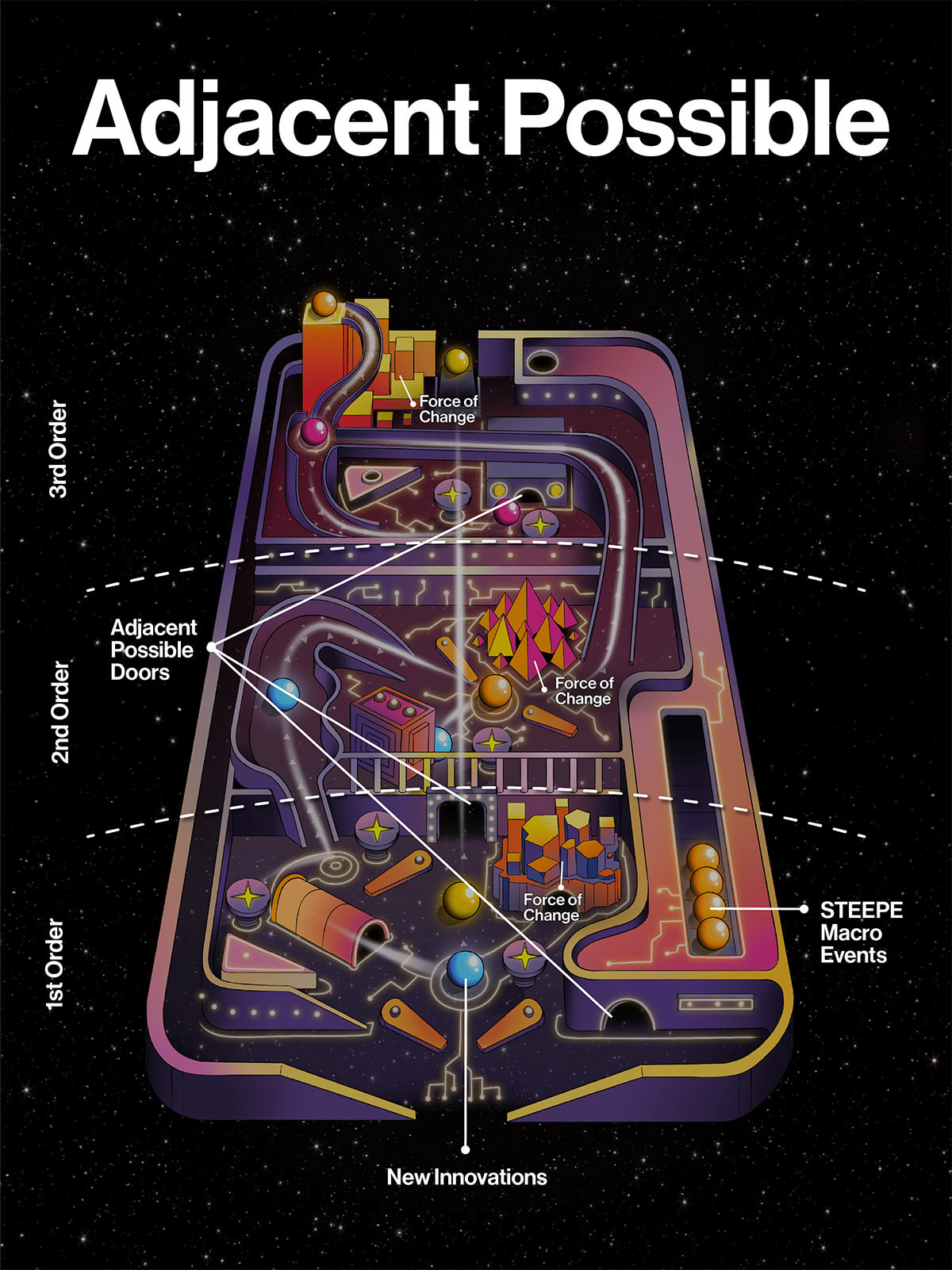

When exercising this lens of the possible, those with an affinity for innovation often jump to a utopian world where technology can cure all of society’s problems and make life tremendously easier. On the other hand, those fearful of change may envision a dystopian world where robots and computers take over, robbing us of all opportunity for authentic human connection. The future likely lies somewhere in the middle. What we should be aspiring to is a word few know: protopia. Coined by Kevin Kelly, protopia means progressing incrementally as a society, with each innovation building on its precursors. Protopia is about being better than yesterday, which makes it difficult to picture. As Kelly puts it, “protopia is much much harder to visualize. Because a protopia contains as many new problems as new benefits, this complex interaction of working and broken is very hard to predict.” Although the exponential nature of technological growth in recent years might suggest otherwise, we don’t seem to be heading toward a dystopian or utopian future just yet. We can aspire to protopia by recognizing the adjacent possible, a concept Steven Johnson describes in his book, Where Good Ideas Come From, as “a story of one door leading to another door, exploring the palace one room at a time.” Acknowledging the multitude of doors every innovation opens is crucial as we test out GPTs’ cutting-edge capabilities while attempting to prevent adverse impacts.

A realistic solution for this reality

Innovating with humane holistic design

We’ve established that, with today’s speed of deployment and adoption, we cannot afford to fix errors after going to market, and that we must explore uncertainties in addition to risks. But how do we actually innovate? Over the last 30 years, we have answered that question by putting humans at the center. Human-centered design (HCD) was revolutionary for developing empathy-driven frameworks that help us understand customer needs and how to fulfill them while orchestrating the best experience possible. Although HCD has been instrumental in allowing us to address pain points and differentiate our offerings, we must upgrade our innovation tools to meet the demands of our ever faster and more globally connected world. It is no longer enough to put individual humans at the center; we must focus on the whole of humanity. When we make the shift from human-centered design to humane holistic design (HHD), we consider not only the linear domino effects of our inventions but also the complexity and butterfly effects of how they will impact the environment and society. And with A.I.’s and future GPTs’ incredible potential for reach, innovating with HHD should be considered non-negotiable.

How we get there

Responsible technology and purposeful innovation

But what does it mean to lead responsible technology? Well, it starts by combining everything we’ve discussed. It entails prioritizing ethics in the creation, development, and deployment of innovation. It requires thinking not just from a risk perspective, but also from a possibility perspective in reckoning with uncertainty. And it is executed through HHD, considering the variety of ecosystems a technology, product, or service touches.

Although most organizations surely aspire to responsible technology, the realities of swift adoption, effect magnification, and a broken innovation philosophy are standing in their way. Fortunately, the solution lies in Purpose. Tying innovation to organizational Purpose is the key to implementing ethical standards at each step. Just as every organization needs a Why for existing, it also needs a Why for innovating. When we align the two, we will create an output with a clear and compelling intention that is also authentic to the company. Although this will look different for a business that’s been a tech trailblazer vs. one that’s just beginning to explore digital, both must match their unique strengths to the need in the world they are trying to meet through innovation. If leaders are dedicated to creating a culture in their organization with a strong purpose, vision, mission, and values, responsible innovation should work hand in hand with all these components, too.

For example, if an organization values equity, it will want to innovate in a way that considers the unintended consequences for access. If a company’s vision involves a sustainability goal, it will want to ensure its innovations are as eco-friendly as possible across the entire value chain. Connecting all these pieces is the new competitive advantage.

Tricia Wang also notes how “Purpose at the end of the day helps you come back to the boardroom and say, ‘this is a really tough situation that we just encountered and can’t predict every situation.’ Purpose will help you align, figure out how to make the best decision, and to navigate those tensions.” Purpose will ground business leaders’ choices for the future. Putting forethought into the reason behind our innovation efforts also minimizes risk and manages uncertainty, so responsible technology will be less susceptible to systemic failure. In addition to having higher trust in organizations that don’t suffer reputational damage due to innovation mishaps, consumers and employees are more loyal to companies with a strong sense of Purpose.

If you want to win the 2020s, you’ve got to get ahead of 2030

Traditionally, organizations that prioritize innovation have outcompeted others in the marketplace; however, the How and Why behind innovation are becoming just as important as the What. The positive reception of Microsoft’s accessibility championing and of the Monk Skin Tone feature in Google’s new Pixel phone, which makes for more representative images, are two examples of companies infusing “why” into their (successful) innovation strategies. Scientist, entrepreneur, and emotional AI thought leader Dr. Rana el Kaliouby predicts that “technology in the future is going to interface with us in just the way we interact with one another, through conversation, through perception, and empathy.” She reminds us that “innovation isn’t just about building better human machines,” but rather “about being better humans and helping humans.” Innovation helps us become better by first dreaming up what better could be. We must introduce new ways of thinking and improve our innovation techniques so we can get ahead of the dark side of GPTs and enjoy discovering the abundance of ways these inventions can positively influence humankind.

Visuals created by Catalina Lotero, Director of Design, BCG BrightHouse, along with Zay Cardona, Illustrator.